AI Tokens Are the Missing Rail for Decentralized Inference – Here’s the Data

The convergence of crypto and artificial intelligence (AI) is powering a growing number of real-world use cases. One recent example is the rise of decentralized networks for training and running AI models.

Projects such as Bittensor, Gensyn, SingularityNET, and others are demonstrating how decentralized GPU compute can be used for AI training and inference. Inference is the stage where a trained AI model puts its “learned knowledge” into action, and it is what powers applications like chatbots, agents, and code assistants.

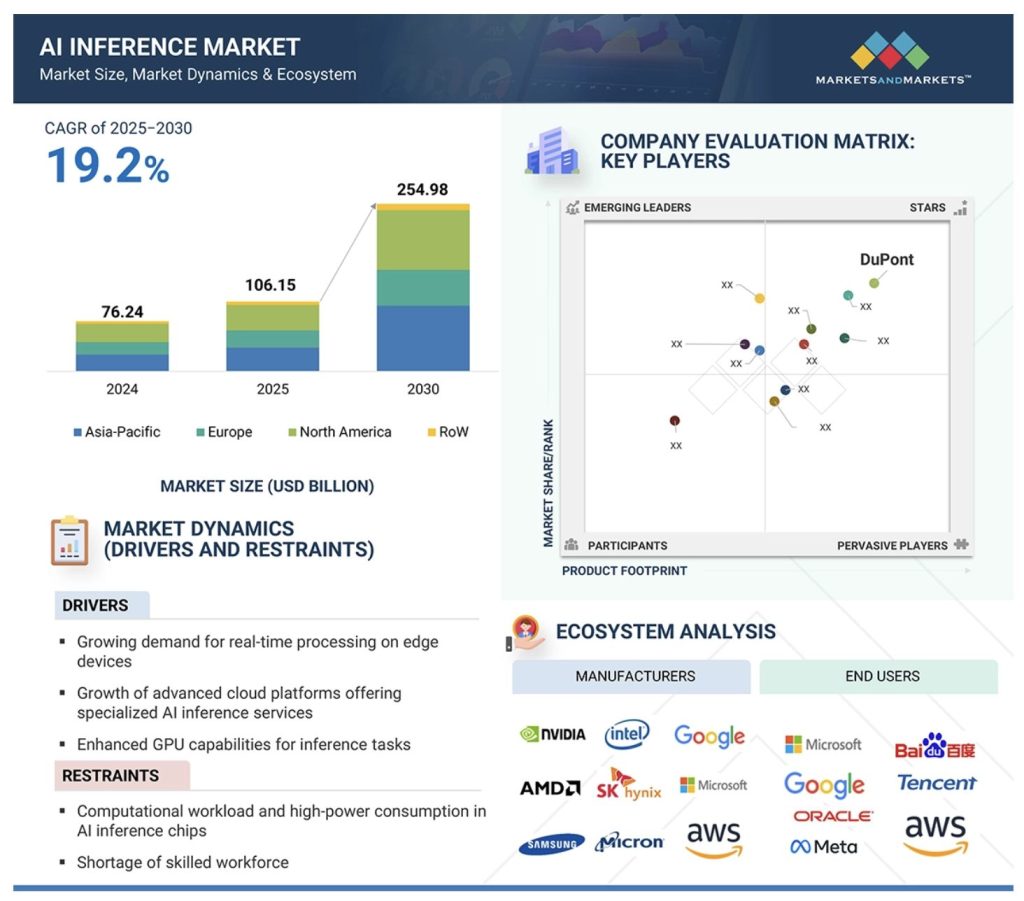

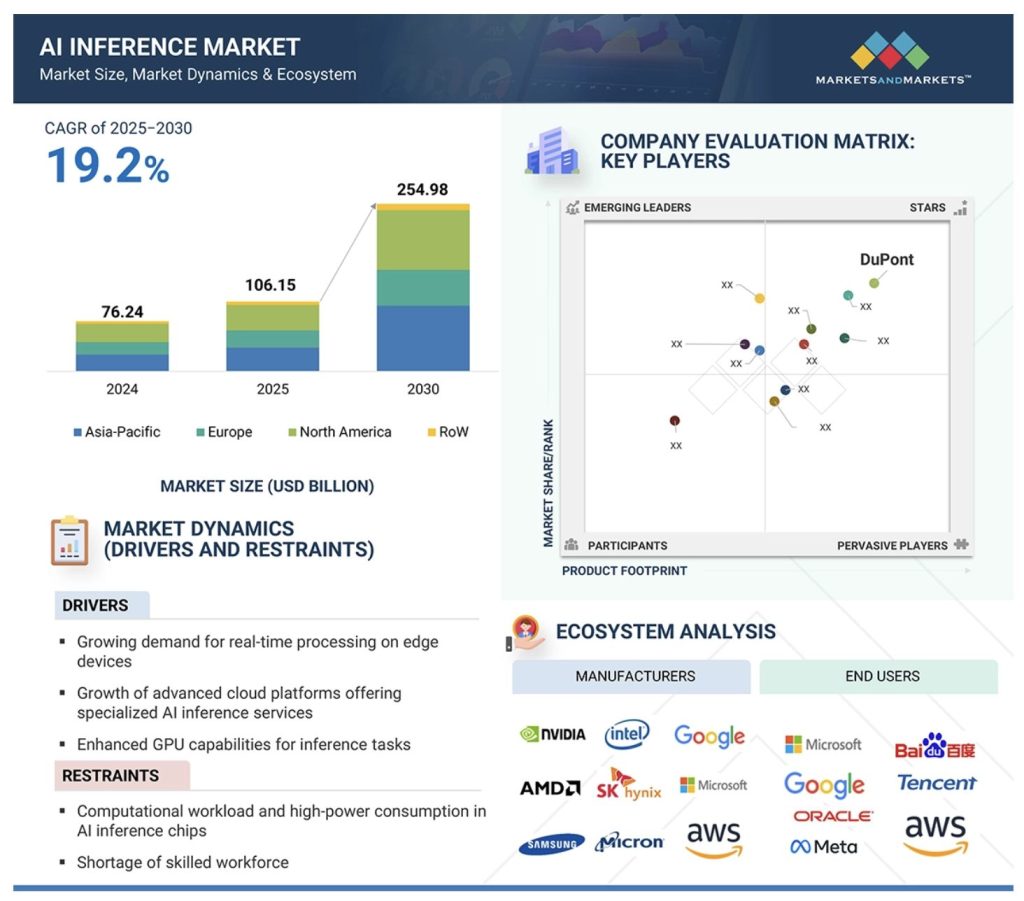

Inference has become increasingly important as AI adoption grows. The AI inference market is expanding rapidly: some reports estimate its value at $76.25 billion this year and project it to reach $349.49 billion by 2032.
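
To put those two figures in perspective, the short Python sketch below computes the compound annual growth rate (CAGR) they imply. It assumes “this year” means 2025, which the article does not state, so the seven-year horizon is an assumption; under it, the implied growth rate comes out at roughly 24% a year.

```python
# Implied compound annual growth rate (CAGR) for the inference-market figures above.
# ASSUMPTION: "this year" is taken to be 2025 (not stated in the article), so the
# projection spans 2032 - 2025 = 7 years. Change BASE_YEAR if the report uses another base.
BASE_YEAR = 2025          # assumption
TARGET_YEAR = 2032
START_VALUE_BN = 76.25    # USD billions, estimate for "this year"
END_VALUE_BN = 349.49     # USD billions, projection for 2032


def implied_cagr(start: float, end: float, years: int) -> float:
    """Return the constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


cagr = implied_cagr(START_VALUE_BN, END_VALUE_BN, TARGET_YEAR - BASE_YEAR)
print(f"Implied CAGR: {cagr:.1%}")
```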

Still, most AI models continue to be trained by centralized AI labs such as OpenAI, Anthropic, Meta, Google, and xAI. Fortunately, this is beginning to change as decentralized networks for training AI models mature.

The Role of AI Tokens in Decentralized Inference

Decentralized AI networks differ from traditional, centralized approaches in several ways, but one key differentiator is their use of tokens as incentive mechanisms.

Luke Gniwecki, head of AI compute and blockchain product for SingularityNET and CUDOS, told Cryptonews that decentralized inference networks require economic coordination without centralized billing, trust, or custody. “Tokens provide that coordination,” he said.

Gniwecki elaborated that “AI tokens” allow permissionless access. This means that anyone can consume compute using a Web3 wallet, without relying on traditional payment processors.

He added that AI tokens enable transparent metering: pricing measured per token of inference output rather than through an opaque cloud subscription.
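
Because “token” means two different things here (a unit of model output, and the crypto token used to pay for it), a small sketch of per-token metering may help. It assumes a hypothetical price per 1,000 output tokens, quoted in a generic network token; neither the rate nor the names below come from SingularityNET or CUDOS.

```python
from dataclasses import dataclass
from decimal import Decimal

# HYPOTHETICAL rate for illustration only; the article does not publish prices.
# Denominated in a generic network token, charged per 1,000 model-output tokens.
PRICE_PER_1K_OUTPUT_TOKENS = Decimal("0.002")


@dataclass
class InferenceRequest:
    request_id: str
    output_tokens: int  # number of tokens the model generated for this request


def metered_cost(req: InferenceRequest, price_per_1k: Decimal = PRICE_PER_1K_OUTPUT_TOKENS) -> Decimal:
    """Charge for exactly the output tokens produced, instead of a flat subscription fee."""
    return price_per_1k * Decimal(req.output_tokens) / Decimal(1000)


requests = [InferenceRequest("a", 1500), InferenceRequest("b", 250)]
total = sum((metered_cost(r) for r in requests), Decimal(0))
print(f"Total charge: {total} network tokens")
```

Using `Decimal` rather than floats keeps small per-request charges exact, which matters when many tiny amounts are summed into a bill.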

“Demand for AI services also directly increases token utility and the network value,” Gniwecki pointed out. “Moreover, multiple node operators can be rewarded fairly for verifiable compute contributions.”
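
Rewarding operators “fairly for verifiable compute contributions” can be illustrated with a simple pro-rata split. The sketch below assumes some verification step has already counted each node's accepted work units; the function name, pool size, and node IDs are hypothetical and do not describe ASI:Cloud's actual reward logic.

```python
from decimal import Decimal


def split_rewards(pool: Decimal, verified_units: dict[str, int]) -> dict[str, Decimal]:
    """Split a reward pool pro rata to each operator's *verified* compute units.

    Hypothetical sketch: work that failed verification is simply not counted,
    so an operator with zero verified units receives nothing.
    """
    total = sum(verified_units.values())
    if total == 0:
        return {op: Decimal(0) for op in verified_units}
    return {op: pool * Decimal(units) / Decimal(total) for op, units in verified_units.items()}


payouts = split_rewards(Decimal("100"), {"node-a": 600, "node-b": 300, "node-c": 100})
print(payouts)
```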

AI Tokens in Action: $FET and ASI Token

To put this in perspective, Gniwecki pointed to ASI:Cloud, a high-performance cloud platform focused on inference and AI workloads. According to Gniwecki, ASI:Cloud provides token-based access to popular AI models, as well as to a wide range of GPU infrastructure around the world.

“ASI:Cloud was developed by CUDOS in collaboration with SingularityNET, which is a marketplace for AI services and inference where users can query decentralized AI models. ASI:Cloud uses the ASI ‘$FET’ token to coordinate access, billing, and incentives within this distributed inference network,” Gniwecki said.

For example, the $FET token powers “Inference-as-a-Service,” which is an AI compute layer where developers pay per token of model output to run AI workloads on globally distributed GPU clusters.

“Each node contributes compute capacity managed by CUDOS, while SingularityNET provides the inference backend, routing, and optimization stack,” Gniwecki added.

Regarding incentives, Gniwecki noted that the ASI token, which he also referred to as “$FET,” is used for payments across the platform. The ASI token is the primary cryptocurrency of the Artificial Superintelligence Alliance, a decentralized AI ecosystem formed by projects including Fetch.ai, SingularityNET, and CUDOS.

“The ASI token shows how inference costs are tracked and paid across different infrastructure providers,” Gniwecki commented.

TAO and Bittensor

Bittensor offers another interesting use case. It is a decentralized AI network that allows developers, miners, and validators to contribute machine learning models and data on-chain.

“TAO” is the native token of Bittensor. Contributors earn TAO when their work is judged useful under the network's “proof‑of‑intelligence” consensus mechanism. TAO’s supply is capped at 21 million tokens, and halvings occur roughly every four years.
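
A capped supply with periodic halvings is a geometric series: each era issues half as much as the one before, so total issuance approaches the cap without ever exceeding it. The sketch below models that under a Bitcoin-style assumption that the first era issues half the 21 million cap; the article does not describe exactly what triggers Bittensor's halvings, so treat the era structure as illustrative.

```python
# Illustrative model of a capped, halving emission schedule like the one described for TAO.
# ASSUMPTIONS: the first era issues half of the cap, and each later era issues half as much
# as the previous one (Bitcoin-style). Bittensor's exact halving trigger is not modeled.
MAX_SUPPLY = 21_000_000


def cumulative_supply(eras: int, cap: int = MAX_SUPPLY) -> float:
    """Total tokens issued after `eras` complete eras of a halving schedule."""
    issued = 0.0
    era_issuance = cap / 2      # first era issues half of the cap
    for _ in range(eras):
        issued += era_issuance
        era_issuance /= 2       # each halving cuts per-era issuance in half
    return issued


for n in (1, 2, 4, 10):
    print(n, f"{cumulative_supply(n):,.0f}")
```

Under these assumptions, four eras (roughly 16 years at one halving every four years) would issue 19,687,500 tokens, about 94% of the cap.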

Karia Samaroo, CEO of publicly traded digital asset company xTAO, told Cryptonews that xTAO seeks to accelerate the growth of Bittensor by holding and staking TAO. According to Samaroo, xTAO is one of the network’s leading validators.

“Bittensor is building an open marketplace for machine intelligence, or a network where anyone can contribute models and be rewarded directly in TAO for the value they provide,” Samaroo explained.

Samaroo further believes that TAO functions as the economic engine of the entire Bittensor system, measuring, incentivizing, and securing intelligence across the network.

For instance, Samaroo explained that TAO coordinates open computation and intelligence across thousands of independent nodes without a central authority.

“Traditional AI depends on closed data centers owned by a few large companies. Bittensor flips that model, as TAO creates an open, global market where anyone can contribute compute, models, or data and be compensated directly based on performance. TAO decentralizes intelligence itself, creating an incentive layer that keeps AI open, distributed, and censorship-resistant,” he explained.
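
The idea of compensating contributors “directly based on performance” can be sketched as validators scoring miners, those scores being combined with each validator's stake as weight, and an emission budget being split in proportion to the result. This is a deliberately simplified illustration, not Bittensor's actual Yuma Consensus (which adds clipping, bonds, and other safeguards); all names and numbers are hypothetical.

```python
# Simplified, HYPOTHETICAL sketch of performance-based rewards. Not Bittensor's actual
# Yuma Consensus: validators score miners, scores are stake-weighted, and an emission
# budget is split in proportion to the combined weights.


def consensus_scores(stakes: dict[str, float], scores: dict[str, dict[str, float]]) -> dict[str, float]:
    """Stake-weighted average of every validator's score for each miner."""
    total_stake = sum(stakes.values())
    miners = sorted({m for per_validator in scores.values() for m in per_validator})
    return {
        m: sum(stake * scores.get(v, {}).get(m, 0.0) for v, stake in stakes.items()) / total_stake
        for m in miners
    }


def distribute_emission(emission: float, weights: dict[str, float]) -> dict[str, float]:
    """Split an emission budget in proportion to consensus weights."""
    total = sum(weights.values())
    if total == 0:
        return {m: 0.0 for m in weights}
    return {m: emission * w / total for m, w in weights.items()}


stakes = {"val-1": 3.0, "val-2": 1.0}
scores = {
    "val-1": {"miner-a": 0.9, "miner-b": 0.1},
    "val-2": {"miner-a": 0.5, "miner-b": 0.5},
}
weights = consensus_scores(stakes, scores)
rewards = distribute_emission(1.0, weights)
print(rewards)
```

Weighting by stake means a validator with more at risk has more say, which is one common way such networks try to make scoring costly to manipulate.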

Other Decentralized AI Networks Using AI Tokens

Gensyn is yet another protocol supporting decentralized machine learning. Gensyn was early to the sector, publishing its first litepaper laying out a framework for decentralized training in February 2022.

Today, Gensyn connects data, compute, and capital into a single verifiable network. This allows users to build powerful AI systems across a global substrate of devices. Gensyn is currently running its testnet.

Jeff Amico, COO of Gensyn, told Cryptonews that the network will soon use a native token to coordinate resources, enhance security, and align incentives among participants.

“Well-designed tokens help coordinate value, trust, and verification in a decentralized machine learning network,” Amico said. “They are a common unit of exchange among participants who don’t know or trust one another, but want to transact.”
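
One way a token can let strangers transact is through escrow and collateral: the buyer locks payment, the provider posts a stake, and a verification result decides who is paid. The sketch below is a hypothetical illustration of that pattern only; the article does not describe Gensyn's token mechanics, and sending the slashed stake to the buyer is an arbitrary design choice made here.

```python
from dataclasses import dataclass
from enum import Enum, auto


class JobState(Enum):
    FUNDED = auto()
    SETTLED = auto()
    REFUNDED = auto()


@dataclass
class EscrowedJob:
    """HYPOTHETICAL escrow for a compute job between parties who don't trust each other.

    The buyer locks `payment` and the provider posts `provider_stake` as collateral.
    Not Gensyn's protocol; real networks may verify and settle very differently.
    """
    payment: int          # tokens locked by the buyer
    provider_stake: int   # tokens posted by the provider as collateral
    state: JobState = JobState.FUNDED

    def settle(self, result_verified: bool) -> dict[str, int]:
        if self.state is not JobState.FUNDED:
            raise RuntimeError("job already settled")
        if result_verified:
            # Honest work: the provider is paid and recovers its stake.
            self.state = JobState.SETTLED
            return {"buyer": 0, "provider": self.payment + self.provider_stake}
        # Failed verification: the buyer is refunded and receives the slashed stake.
        self.state = JobState.REFUNDED
        return {"buyer": self.payment + self.provider_stake, "provider": 0}


honest = EscrowedJob(payment=50, provider_stake=20)
print(honest.settle(result_verified=True))
cheater = EscrowedJob(payment=50, provider_stake=20)
print(cheater.settle(result_verified=False))
```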

In addition, Akash Network provides decentralized cloud computing that can be used to deploy and run AI inference models. Most AI applications deployed on Akash use GPUs for inference. For example, Venice.ai, a privacy-first alternative to ChatGPT, uses Akash to host advanced AI models.

“AKT” is the native token of the Akash blockchain. Users pay in AKT to use the network, and providers are paid in AKT. Greg Osuri, founder of Akash Network, told Cryptonews that AKT secures the Akash blockchain through proof-of-stake consensus.
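
The “users pay, providers get paid” flow can be pictured as a prepaid lease. The sketch below assumes, for illustration only, that a tenant funds a deposit and the provider draws a fixed price per block from it until the funds run out; the class, units, and rates are hypothetical rather than taken from Akash's own code.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    """Simplified, HYPOTHETICAL model of a compute lease paid in a network token such as AKT.

    Assumptions: the tenant pre-funds `deposit`, and the provider is paid a fixed
    `price_per_block` from that deposit until it is exhausted. Units are illustrative.
    """
    deposit: int           # smallest token units locked by the tenant
    price_per_block: int   # agreed rate in the same units
    paid_to_provider: int = 0

    def settle(self, blocks: int) -> int:
        """Pay the provider for `blocks` elapsed blocks, capped by the remaining deposit."""
        due = min(blocks * self.price_per_block, self.deposit)
        self.deposit -= due
        self.paid_to_provider += due
        return due

    @property
    def active(self) -> bool:
        """The lease stays active while the deposit covers at least one more block."""
        return self.deposit >= self.price_per_block


lease = Lease(deposit=1_000, price_per_block=3)
lease.settle(100)   # provider receives 300 units; 700 remain in the deposit
print(lease)
```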

“This means without the token, there is no blockchain and hence no network,” Osuri said.

He added that AKT provides payment rails and incentives to bootstrap the compute on Akash. “As you know, GPUs are in high demand, and to build an alternative network to competitors like Amazon, it’ll be impossible without the token incentives.”

Challenges Associated With AI Tokens

Although decentralized training networks have moved from an interesting concept to functioning systems, many of these projects are far from perfect.

There are several reasons for this, but Galaxy’s “Decentralized AI Training” report notes that “incentives and verification lag technical innovations.” According to the report, only a handful of networks currently deliver real-time token rewards on-chain.

Gniwecki added that the challenges include reliability and latency, tokenomics balance, verification and security, and regulatory issues.

“For instance, if incentives lean too heavily toward speculation or over-rewarding supply, the network risks volatility,” he said. “ASI’s approach ties token demand directly to usage. For example, pay-per-token inference; grounding value in compute consumption rather than yield farming.”

Gniwecki further noted that ensuring honest computation remains a core challenge for decentralized inference. He added that integrating AI tokens with fiat payments and enterprise budgets can also be difficult.

“ASI solves this via dual payment rails using crypto and fiat. This stabilizes access for mainstream users while retaining decentralized settlement for crypto-native ones,” Gniwecki said.
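
A dual-rail setup can be sketched as pricing usage in fiat and letting each user choose how to settle: directly in USD, or in tokens converted at a current exchange rate. The sketch below is a hypothetical illustration of that idea; the function name, the rounding policy, and the source of the exchange rate are assumptions, not details of ASI's implementation.

```python
from decimal import Decimal, ROUND_UP
from typing import Optional


def settle_amount(usd_price: Decimal, rail: str, token_usd_rate: Optional[Decimal] = None) -> tuple[str, Decimal]:
    """Return (currency, amount) for a usage charge priced in USD.

    HYPOTHETICAL sketch:
    - rail="fiat": charge the USD amount directly (e.g. through a card processor).
    - rail="crypto": convert to tokens at `token_usd_rate` (USD per token), rounding up
      to 8 decimal places so the provider is never underpaid.
    """
    if rail == "fiat":
        return "USD", usd_price.quantize(Decimal("0.01"), rounding=ROUND_UP)
    if rail == "crypto":
        if not token_usd_rate or token_usd_rate <= 0:
            raise ValueError("a positive token/USD rate is required for the crypto rail")
        tokens = (usd_price / token_usd_rate).quantize(Decimal("0.00000001"), rounding=ROUND_UP)
        return "TOKEN", tokens
    raise ValueError(f"unknown rail: {rail}")


print(settle_amount(Decimal("12.50"), "fiat"))
print(settle_amount(Decimal("12.50"), "crypto", token_usd_rate=Decimal("0.5")))
```

Quoting in fiat keeps the price a mainstream user sees stable even if the token's market price moves, while crypto-native users can still settle on-chain.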

AI Tokens Will Advance

Challenges aside, decentralized AI training and inference networks are likely to keep advancing.

“Over the next few years, AI will shift from closed, centralized platforms to open protocols that coordinate key resources such as compute, data, and capital,” Amico noted.

He shared that Gensyn is particularly focused on driving this transition through applications like “RL Swarm,” which is a peer-to-peer reinforcement learning training system, along with BlockAssist, which is an assistant training framework.

Echoing this, Gniwecki shared that over the next year ASI:Cloud will evolve from decentralized access to programmable AI infrastructure.

“These developments will turn the ASI token into more than a payment method, but rather as a coordination tool for AI collaboration, model sharing, and autonomous agent economies. As the platform scales, inference usage is expected to surpass 3 billion processed tokens in its first 100 days, with future staking incentives tied to verified compute throughput.”
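
For a sense of scale, the back-of-the-envelope calculation below converts the projection of 3 billion processed tokens in 100 days into average daily and per-second throughput. It assumes “processed tokens” refers to units of model input and output rather than ASI tokens, and that usage is spread evenly; real traffic is burstier, so peak load would be higher.

```python
# Average throughput implied by 3 billion processed tokens over the first 100 days.
# ASSUMPTIONS: "tokens" are model tokens (not ASI tokens), and usage is spread evenly.
TOTAL_TOKENS = 3_000_000_000
DAYS = 100
SECONDS_PER_DAY = 24 * 60 * 60

tokens_per_day = TOTAL_TOKENS / DAYS
tokens_per_second = tokens_per_day / SECONDS_PER_DAY
print(f"{tokens_per_day:,.0f} tokens/day, about {tokens_per_second:,.0f} tokens/s on average")
```

That works out to 30 million tokens per day, or roughly 347 tokens per second on average.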

The post AI Tokens Are the Missing Rail for Decentralized Inference – Here’s the Data appeared first on Cryptonews.
